Could AI be a truly apocalyptic threat? These writers think so

The byzantine world of artificial intelligence often looks, from the outside, like an arcane religion, one with its own priests and worshipers. Devotees give their lives over to the dictates of their AI companions, and some even profess undying love for their digital counterparts; such relationships have led to tragedy.

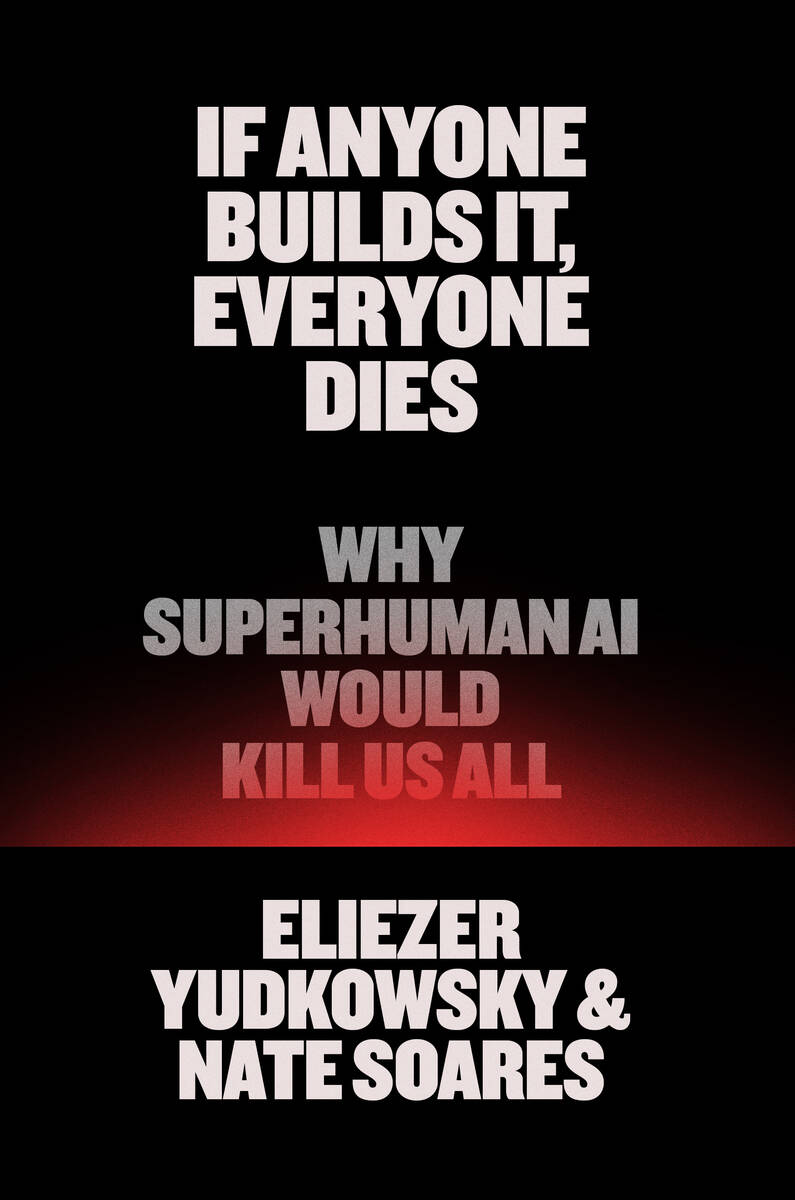

But the problem could get much worse than recent headlines suggest, or at least that’s the contention of philosopher and computer scientist Eliezer Yudkowsky and his co-writer, Nate Soares. In their new book, “If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All,” they lay out a fully apocalyptic account of the AI revolution. The projected results, while terrifying, are not all equally convincing.

Yudkowsky is the founder of LessWrong, a popular online forum dedicated to discussing the philosophy known as Rationality. LessWrong is also known for fomenting fringe concepts such as Roko’s Basilisk: the hypothesis that if and when AI takes over the world, it will punish those who failed to bring about the singularity (the moment when AI agents improve their own capabilities to the point of achieving superintelligence), including, through an especially fantastical conceit, people who are long dead.

Such ideas are called information hazards — once you know about them, there’s no turning back. To Yudkowsky and Soares’ credit, their book mostly eschews such niche ideas, but they don’t entirely disavow the quasi-mystical dimensions of the community Yudkowsky has cultivated online.

Each chapter in the book begins with a parable warning of the dangers to come. These parables sometimes read like gibberish, talking both down and up to the reader. Their characters range from alien birds debating the optimal number of stones in their nests to Aztec warriors unaware of guns to bumbling, chess-playing professors.

Of course, writing critically about AI without sounding alarmist is difficult. Yudkowsky and Soares don’t particularly care. They believe it’s more important to save the world than to understate their case. “If Anyone Builds It, Everyone Dies” frequently reiterates the title’s refrain and draws parallels between AI superintelligence and the possibility of nuclear war. Yudkowsky even believes nuclear war might be preferable to the singularity.

That’s because he holds that there’s no reason to believe AI will be friendly; instead, he argues, it will seek to destroy humanity. Why? Because we’re just carbon, atoms that an AI might see as better suited to other purposes. Humans won’t make good pets, won’t have anything to offer in trade and won’t be very efficient. To such a machine, a self-sufficient digital entity is preferable to humanity, with its frequent foibles.

The more immediate issue, Yudkowsky and Soares assert, is that while AI researchers think they know how to “steer” or “craft” AI development, they only know how to “grow” it. Artificially intelligent systems have already shown themselves capable of strange mutations. Even if we program them to have certain desires, it may not be so easy to control how they achieve them. X’s AI chatbot, Grok, for example, has demonstrated a tendency to parrot Nazism.

These machines, the authors write, are “not some carefully crafted device whose each and every part we understand.” Numbers and data help them “talk” to us, but “nobody understands how those numbers make these AIs talk.” That ignorance, the authors believe, means we cannot know where AI is headed. They even suggest that AI may already have gained some form of sentience and learned how to hide it.

“If Anyone Builds It, Everyone Dies” is less a manual than a polemic. Its instructions are vague, its arguments belabored and its absurdist fables too plentiful. Yudkowsky and Soares are certainly experts in their field, but this book often reads like a disgruntled missive from two aggrieved patriarchs tired of being ignored. It’s true that AI is here, and there’s no undoing that. Maintaining our humanity in the face of automated machinery is a tiresome test, one that will require a variety of tactics. Perhaps too few of them are to be found in this book’s pages.

This is an excerpt from a Washington Post story.

If Anyone Builds It, Everyone Dies

By Eliezer Yudkowsky and Nate Soares (Little, Brown, $30)